Field Notes

Is Your Organization Measuring What You Think You’re Measuring?

The Root Cause team recently finished analyzing the organizational health and program quality of 12 organizations that provide youth career development, college access, and/or college persistence programs. These organizations are all members of the Peer Performance Exchange.

This year we changed the way we review outcome data. In the past we asked organizations to report based on our definitions of each outcome. This method was challenging and sometimes led to:

– Underreporting: organizations did not supply the data at all because they didn’t define the outcome in the same way

– Inexact reporting: organizations supplied their data regardless of the definition, meaning that we ran the risk of averaging data defined in different ways (fortunately we caught this during interviews!)

– Unclear validity: even if all the organizations use the same definition, the way they gather and verify the data could affect what they are actually measuring

Given these challenges, this year we asked organizations to provide their own definitions for the common outcomes and tell us how they verify the data. The results were eye-opening. They highlighted two important things to consider when collecting and comparing outcomes:

– We cannot assume we all mean the same thing when we use specific words

– The way data is verified can affect (1) the validity of the data and (2) whether an organization is actually measuring what it wants to be measuring

Let’s look at one example: post-secondary education matriculation.

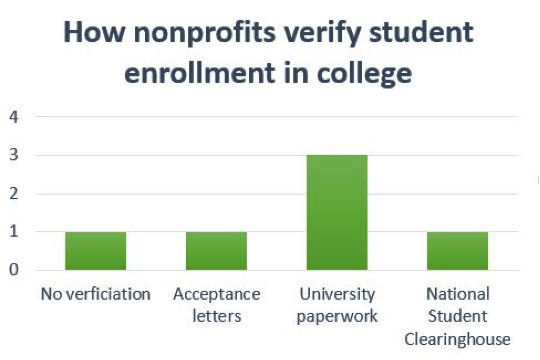

Half of the organizations we analyzed (six) reported on this outcome. In this case the definitions were quite close. All six organizations said that they were measuring how many students enroll in college. However, the sample of verification methods shown above tells us that they may actually be measuring slightly different things.

– One organization is not verifying enrollment at all – which means it is likely relying on students’ declarations of intention to go to college. With growing recognition that “summer melt” (students who intend to go to college at the beginning of the summer but do not enroll in the fall) is a huge challenge for underrepresented students, this calls into question the 100% enrollment this organization reported. Instead of enrollment, is it actually measuring students’ intention to go to college?

– One organization is using acceptance letters as a proxy for enrollment – again the “summer melt” phenomenon could be affecting their data. This organization seems to be measuring acceptance rather than matriculation.

– Three organizations use university paperwork – class schedules, college email addresses, copies of enrollment forms that were sent to the college, etc. These forms have varying degrees of reliability, but all are certainly more reliable than acceptance letters or no verification at all. Still, do these methods ensure that the student showed up for the first day of class?

– One organization uses the National Student Clearinghouse. While this is not possible for all organizations, it is certainly the most reliable way to track students’ matriculation and continued enrollment in college.
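
For readers who keep their outcome data in a spreadsheet or script, the comparison above can be sketched in a few lines of Python. This is only an illustration, not the method Root Cause used: the labels in `MEASURED_CONSTRUCT`, the `ORG_METHODS` list, and both function names are hypothetical, and the mapping from verification method to "what is really being measured" is our reading of the four cases described above.

```python
from collections import Counter

# Hypothetical labels: what each verification method most plausibly
# captures, following the four cases discussed in this post.
MEASURED_CONSTRUCT = {
    "none": "intention to enroll",
    "acceptance letter": "acceptance",
    "university paperwork": "enrollment (student-supplied documents)",
    "National Student Clearinghouse": "enrollment (third-party record)",
}

# One entry per organization that reported on matriculation (six in total).
ORG_METHODS = [
    "none",
    "acceptance letter",
    "university paperwork",
    "university paperwork",
    "university paperwork",
    "National Student Clearinghouse",
]


def constructs_measured(methods):
    """Count how many organizations are measuring each underlying construct."""
    return Counter(MEASURED_CONSTRUCT[m] for m in methods)


def comparable(methods):
    """Return True only if every organization measures the same construct."""
    return len(constructs_measured(methods)) == 1


counts = constructs_measured(ORG_METHODS)
for construct, n in counts.items():
    print(f"{n} organization(s): {construct}")
print("Safe to average together:", comparable(ORG_METHODS))
```

The point of the check is simply to group reported numbers by what they actually measure before averaging or comparing them. How strictly to draw those groups (for example, whether paperwork and Clearinghouse records count as the same thing) is a judgment call for each organization.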

How is your organization verifying data it collects and reports? Take an honest look at your methods: are you really measuring what you want to be measuring? Are there ways that you can tighten up the data to ensure it is accurate? Or do you need to change the way you define the outcomes so you are crystal clear about what you are measuring?

Determining the best (and most reliable) way to measure some outcomes can be challenging. Root Cause can help. Check out our guide to building a performance measurement system.
